Open-source AI Must Disclose Training Data: A New Era of Transparency

The Open Source Initiative (OSI) has taken a bold step in defining standards for open-source artificial intelligence (AI), explicitly mandating the disclosure of training data. With growing scrutiny over corporate practices, Meta’s AI model, Llama, has been challenged for not meeting these standards, foregrounding a critical debate about transparency in the tech industry.

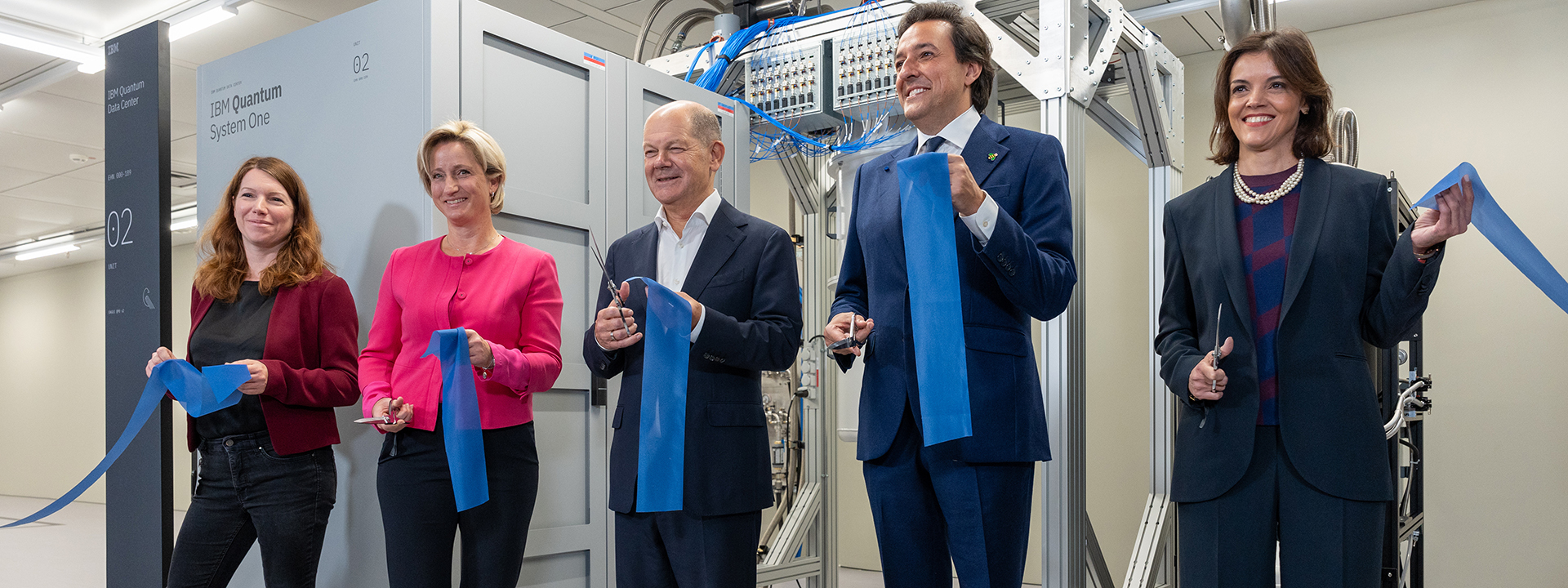

The balance between innovation and transparency in AI development.


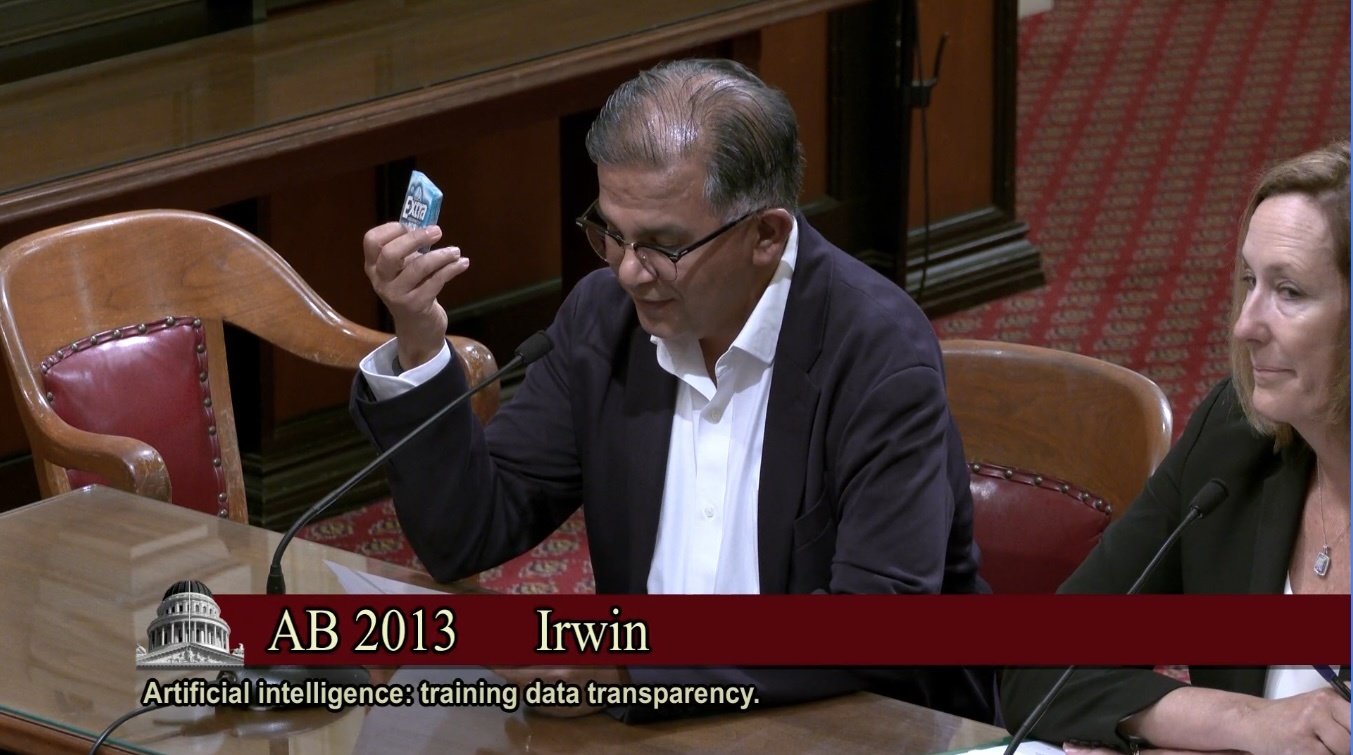

Understanding OSI’s New Definition

The OSI has released a comprehensive definition of what constitutes open-source AI, igniting discussions across the tech landscape. According to this new definition, an open-source AI system must not only provide access to the underlying code but also disclose the data used for training. The initiative emphasizes that AI systems should be not just tools but accountable entities.

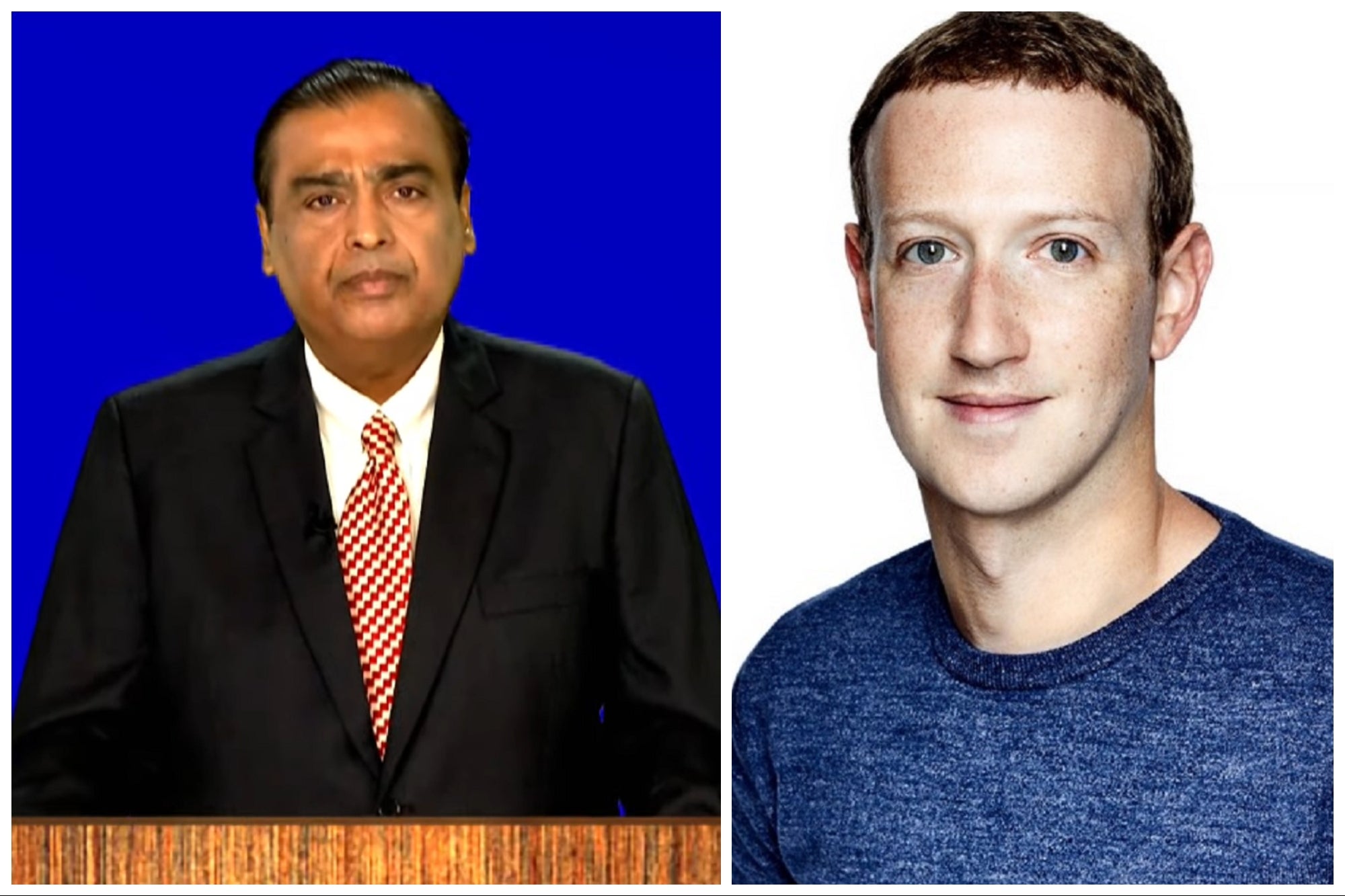

Meta’s Dilemma

Although Llama is branded as the largest open-source AI model, it does not meet OSI’s newly established guidelines. The model is available for public download, but its license restricts commercial use: applications with more than 700 million users cannot use Llama without constraints. This limitation raises significant questions about the access and modification rights that are essential to open source.

Meta justifies its reluctance to share comprehensive training data by citing concerns over safety and ethical implications. However, many observers argue that this anxiety cloaks a desire to maintain a competitive edge, much like Microsoft’s historical resistance to open-source paradigms during the tech revolution of the 1990s.

The Industry’s Response

Reactions within the industry have been telling. Meta’s spokesperson, Faith Eischen, noted the difficulty of defining open-source AI amid rapidly evolving technologies. She said that Meta remains committed to enhancing AI accessibility in collaboration with OSI and various industry partners. Yet critics caution that such collaborations may be a facade that shields proprietary interests rather than promoting genuine transparency.

Collaboration in AI development must prioritize transparency and user rights.


An Essential Shift

The growing emphasis on transparency in AI is echoed by figures like Simon Willison, an independent researcher who insists that OSI’s definition is a crucial antidote to companies that misrepresent their technologies as open source. Clement Delangue, CEO of Hugging Face, shares this sentiment and applauds OSI’s initiative as vital for fostering real discussion about openness in AI, particularly the role training data plays in creating AI models.

What Lies Ahead

As discussions continue, the challenge remains: how to reconcile innovation with compliance under the umbrella of open-source principles. The OSI’s strong stance highlights a crucial tension in the industry. While companies like Meta argue for proprietary control under the guise of safety and security, advocates of open source assert that transparency fosters trust and accountability.

Critically, OSI’s expectations could serve as a rallying point for developers, organizations, and regulators advocating for an authentic commitment to openness.

“In the landscape of artificial intelligence, transparency isn’t just a regulatory checkbox; it’s the cornerstone of innovation and ethical responsibility.” — An advocate for open-source practices.

In summary, the call for open-source AI to disclose training data isn’t merely a technical stipulation; it represents a philosophical shift toward a more collaborative and transparent future in technology. As the industry reckons with these demands, it remains to be seen whether tech giants like Meta will adapt or dig in their heels, potentially stymieing broad-scale innovation.

The future of AI hinges on transparency and ethical practices.