The HBM Adventure: Scaling New Heights in Memory Technology

In the pursuit of more memory capacity and bandwidth, particularly for AI and high-performance computing (HPC), the discussion has come to center on High Bandwidth Memory (HBM). Recent conversations with experts at leading companies have underscored a critical point: any advance must be both scalable and cost-effective. Without those two properties, promising innovations risk remaining unrealistic dreams.

The HBM Bottleneck

As it stands, HBM deployment has hit a bottleneck. HPC and AI workloads demand ever more memory bandwidth, but HBM supply is painfully lean. Only a select few high-end systems can afford HBM, and even those often struggle with severe memory capacity limits. HBM delivers far more bandwidth than conventional DRAM and even GDDR, but it does so at a steep price, which leaves many users short of the memory bandwidth they need.

“When doubling up the memory on the same GPU delivers nearly 2X performance on AI workloads, the memory is the problem!”

This observation captures the crux of the issue: for many applications, the gain comes less from a faster GPU than from more memory to feed those powerful processors.

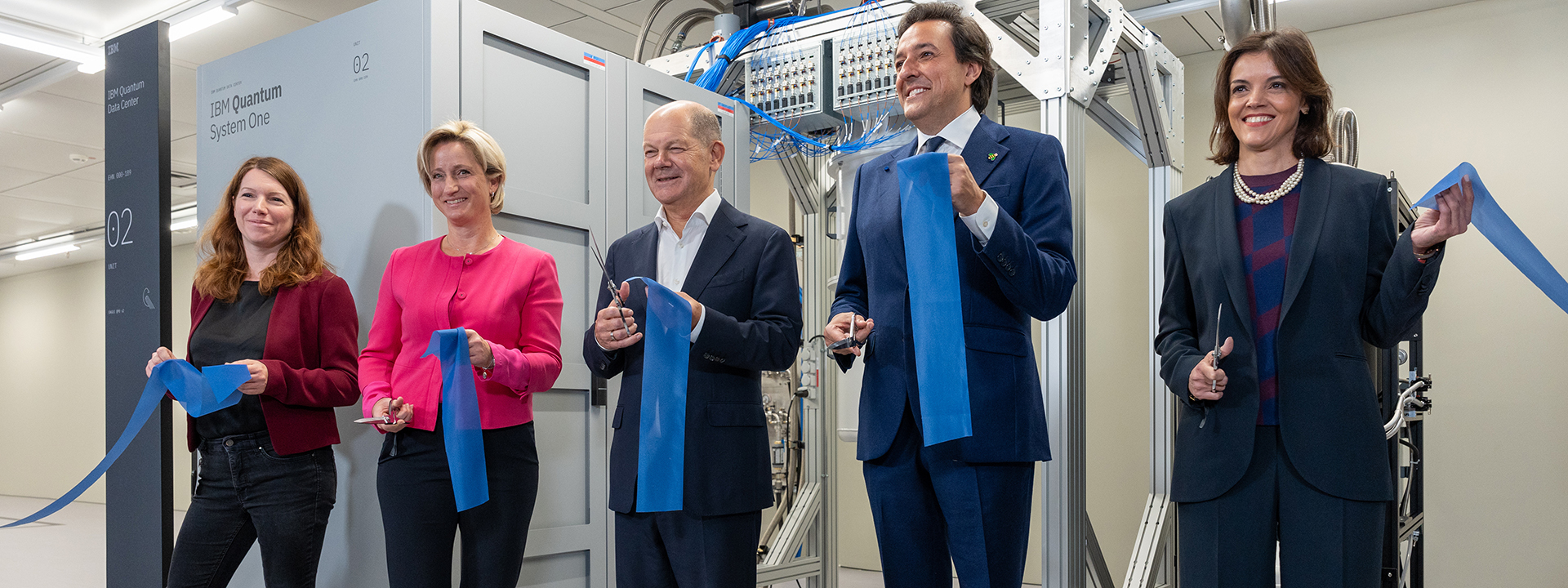

Innovative memory solutions promising to change the landscape.

Exciting Developments from SK Hynix

The landscape shifted recently with two significant announcements from SK Hynix, a dominant player in the HBM sector. At the SK AI Summit in Seoul, the company shared news of a new variant of HBM3E memory. The headline was taller memory stacks: from eight chips high to sixteen. Built from 24 Gbit memory chips, a sixteen-high stack delivers 48 GB, an advance that could reshape the memory capacity of future compute infrastructure.

Existing HBM3E configurations top out at 36 GB per stack, so the move to 48 GB stacks would raise per-stack capacity by a third.
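The capacity figures above follow from simple arithmetic: chips per stack times chip density, divided by eight bits per byte. Here is a minimal sketch in Python. The function name is illustrative, and the 12-high row is an inference (it is the stack height that yields 36 GB from 24 Gbit chips), not something stated in the announcement.

```python
GBIT_PER_GBYTE = 8  # 8 gigabits per gigabyte


def stack_capacity_gb(chips_per_stack: int, chip_density_gbit: int) -> float:
    """Capacity of one HBM stack in GB, ignoring any spare or ECC capacity."""
    return chips_per_stack * chip_density_gbit / GBIT_PER_GBYTE


# 24 Gbit chips at three stack heights.
# The 12-high case is assumed here because it reproduces the 36 GB figure.
for height in (8, 12, 16):
    capacity = stack_capacity_gb(height, 24)
    print(f"{height}-high x 24 Gbit = {capacity:.0f} GB per stack")
```

Under these assumptions the sixteen-high stack comes out at 48 GB, matching the figure quoted for the new HBM3E variant.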

High Stacks and Performance Enhancements

These taller stacks aren’t just about capacity. They are also linked to considerable performance gains. According to Kwak Noh-Jung, CEO of SK Hynix, the new HBM3E brings an 18% improvement in AI training performance and a 32% improvement for inference. It’s worth noting, though, that the basis for these figures remains vague.

Cutting-edge advancements in memory technology pave the way for growth.

Yet, while we can celebrate these milestones, there’s cautious optimism from both clients and manufacturers. Are we truly prepared for the leap from HBM3E to HBM4? With the market’s appetite for more capacity and efficiency continuing to grow, we can only hope these innovations roll out smoothly.

The Road to HBM4

This is a good moment to review the exciting yet formidable HBM roadmap. Since the launch of HBM1 back in 2014, each iteration has brought notable improvements. HBM2 and HBM2E laid the foundation, and HBM3E, with its promising specifications, pushed bandwidth expectations further still.

The future holds even more excitement. HBM4 is slated for release between 2026 and 2027 and is projected to offer 1.4X the bandwidth along with higher chip capacity. This leap seems essential given the surging demand for memory from today’s AI applications.

Looking forward: The evolution of memory technology.

Balancing Act: Performance and Power

As compute engine manufacturers gear up to meet these heightened demands, they must strike a delicate balance. The pressure is mounting to increase performance while lowering power consumption. With recent GPUs reaching staggering wattages, up to 1,200 watts for the Blackwell B200 accelerator, managing thermal constraints and overall efficiency is more pressing than ever.

As quoted in previous talks, “We want a little red wagon, a sailboat, a puppy, and a pony for Christmas too, but just because you put it on your wish list, doesn’t mean you will get it.” This sentiment rings true in the high-stakes memory game.

Looking Ahead

The push for high-capacity HBM is an ongoing journey of innovation that faces challenges on multiple fronts, from manufacturing yield to thermal management. The future landscape may boast 1 TB of memory per compute engine, revolutionizing the industry once again.
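To put the 1 TB figure in perspective, here is a back-of-the-envelope count of how many stacks one compute engine would need. It is a sketch under stated assumptions: 1 TB is taken as 1,024 GB, the per-stack capacities are the 36 GB and 48 GB figures cited earlier, and the function name is illustrative. Packaging limits on how many stacks fit around a compute engine are not modeled.

```python
import math

TARGET_GB = 1024  # assumption: 1 TB = 1,024 GB


def stacks_needed(target_gb: int, stack_gb: int) -> int:
    """Whole number of stacks required to reach at least target_gb."""
    return math.ceil(target_gb / stack_gb)


# Per-stack capacities taken from the HBM3E figures in this article.
for stack_gb in (36, 48):
    count = stacks_needed(TARGET_GB, stack_gb)
    print(f"{stack_gb} GB stacks: {count} needed for {TARGET_GB} GB")
```

Even with 48 GB stacks, the count lands above twenty, which suggests that reaching 1 TB depends on denser chips or taller stacks, not just on adding more stacks.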

As AI and HPC workloads grow, memory bandwidth and capacity become undeniably crucial. It will be fascinating to see how upcoming developments from SK Hynix and others play out, particularly the advances that can keep pace with the voracious demands of tomorrow’s compute engines.

So, as we sit at the brink of these revolutionary advancements in memory technology, questions linger: Are we ready? What innovations lie just over the horizon?

In closing, one fundamental truth stands out: today’s memory landscape needs urgent revitalization before future innovations can flourish. Pulling HBM4E development in well ahead of schedule, rather than waiting until 2026, may yield solutions that align better with current compute needs.